I was thinking about upgrading my old HAL 8000, but I may hold off for a while.

“I’m Sorry, Dave. I’m Afraid I Can’t Do That”

In the fun but somewhat less classic 1983 film WarGames, a supercomputer (WOPR) in the Cheyenne Mountain nuclear command complex directs a strike on the then Soviet Union (anyone notice that the real Soviet Union collapsed about eight years later? Hmmm...). As the cinematic countdown to Armageddon continued, no one appeared able to shut it down until a 17-year-old high-school computer nerd stepped in to save the day. As the end credits rolled, there wasn't a dry eye in thousands of movie theaters across the country.

Both of these films can be said to center on what today we call artificial intelligence, or "AI." What, exactly, is AI? It appears to mean different things depending on whom you're talking to. But perhaps the most easily digested definition I found online is as follows:

AI is a powerful technology that can automate tasks, make predictions, personalize products and services, and analyze data. However, it has limitations in areas such as creativity, emotional intelligence, judgment, common sense, and contextual understanding. AI is a tool that can augment human capabilities but cannot replace them. Understanding the capabilities and limitations of AI is crucial for businesses and individuals who want to leverage this technology to their advantage.

But AI becomes far more sinister when you ignore, as many do, the last half of that definition. Thanks to the above films, and others like them, the most common fear is that artificial intelligence could become an imminent threat to human survival. Imminent threats to human survival are, of course, popular these days. But that has always been so. Consider the volcanic destruction of Pompeii, the fall of the Roman Empire, and the periodic waves of bubonic plague (a.k.a. the Black Death). Those might seem like low-rent disasters to us today, but they weren't to people hundreds and thousands of years ago, whose world was significantly smaller.

But according to the definition proffered above, AI currently falls far short of the apocalyptic. Today's very best supercomputers, capable of what some might call AI, can digest and analyze mountains of data far faster than even the smartest human or the best conventional computer. But the data that an AI computer digests ultimately originates with humans, even if it passes through a million computer iterations along the way. Even if an AI system were fed the entire content of human knowledge and experience, and were capable of containing it (unlikely), who is to say that the result wouldn't be randomly skewed in some way at the far end, much like a secret whispered from one person to the next that is unrecognizable by the time it reaches the last person in the chain?

The danger comes in trusting those results without question, when the traditional bottom line for computer-compiled information has always been "garbage in, garbage out." The humans who compile the data fed into an AI computer, no matter how high-minded they might be, will always be subject to human foibles. In the films referenced earlier, HAL was built and programmed by humans. The same was true of WOPR.

And what is intelligence anyway? Does it have to originate in a biological, thinking being? We can certainly fall down a rabbit hole here. Is your dog or cat intelligent? Yes, though in a limited and somewhat instinctive way. But the term "intelligent" is virtually always applied to humans, since it is we humans who created the concept of intelligence in the first place!

Are today's best computers, or indeed any that we're likely to see in our lifetimes, truly intelligent when the inputs they receive, no matter how vast, ultimately originate in a human brain? What we perceive as "AI" today, or at least what's available to the average consumer, is fairly thin gruel, though somewhat concerning. Google's recent Gemini produced laughable results and has, thankfully, apparently gone back to the drawing board. But another recent "AI" development, the ability to produce "deep fakes" that closely replicate the looks, movements, and speaking voice of an individual (particularly a prominent public figure), can have serious consequences.

Anyone can now use ChatGPT to write a story, article, school assignment, film script, or perhaps even a college thesis, provided only that the computer has access to sufficient information on the internet. The results can be laughable, but they are often dire, as unqualified individuals flood the internet (and other corners of the open marketplace) with hijacked qualifications.

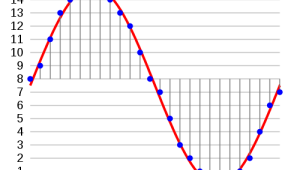

AI Abuzz in AV

Less scary but equally annoying in our field is that manufacturers are now glomming onto the term "AI" like a talisman to describe the performance of their increasingly capable microprocessors. The range of tasks such microprocessors can perform is wide, from fine-tuning a video image frame by frame down to the pixel level. Local dimming, for example (particularly where the number of individually controlled zones runs into the hundreds), uses remarkably sophisticated algorithms to deliver black levels previously unattainable, especially in LCD-based sets. Just switching each of those hundreds of zones on or off is impressive enough, but driving each zone through its full range of brightness, as some processors can now do, is even more jaw-dropping.
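To make the local-dimming idea a little more concrete, here is a minimal sketch in Python. It assumes a grayscale frame with luminance normalized to 0.0-1.0, a simple rectangular grid of backlight zones, and a hypothetical "brightest pixel sets the zone level" rule. The function names `zone_backlight` and `lcd_transmittance` are made up for illustration, light spill between zones is ignored, and this is not any manufacturer's algorithm; commercial processors use proprietary and far more elaborate methods.

```python
from typing import List

Grid = List[List[float]]  # row-major luminance values, assumed normalized to 0.0-1.0


def zone_of(i: int, length: int, zones: int) -> int:
    """Map a pixel index to its zone index along one axis (same rule everywhere)."""
    return i * zones // length


def zone_backlight(frame: Grid, zones_y: int, zones_x: int) -> Grid:
    """Hypothetical rule: each zone's backlight level is its brightest pixel.

    Levels are continuous (0.0 to 1.0), not just on/off, so a zone holding
    only a dim highlight can run at a low level instead of full power.
    """
    h, w = len(frame), len(frame[0])
    levels = [[0.0] * zones_x for _ in range(zones_y)]
    for y in range(h):
        for x in range(w):
            zy, zx = zone_of(y, h, zones_y), zone_of(x, w, zones_x)
            levels[zy][zx] = max(levels[zy][zx], frame[y][x])
    return levels


def lcd_transmittance(frame: Grid, levels: Grid) -> Grid:
    """Scale the LCD layer so that backlight * transmittance reproduces the frame."""
    h, w = len(frame), len(frame[0])
    zones_y, zones_x = len(levels), len(levels[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            b = levels[zone_of(y, h, zones_y)][zone_of(x, w, zones_x)]
            # A fully dark zone (b == 0) gets a fully closed LCD pixel.
            row.append(frame[y][x] / b if b > 0 else 0.0)
        out.append(row)
    return out


if __name__ == "__main__":
    # A 4x4 frame: dark on the left, a bright highlight top-right, a dim one bottom-right.
    frame = [
        [0.0, 0.0, 0.5, 1.0],
        [0.0, 0.0, 0.25, 0.5],
        [0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.2],
    ]
    levels = zone_backlight(frame, 2, 2)
    print(levels)  # [[0.0, 1.0], [0.0, 0.2]]
    print(lcd_transmittance(frame, levels)[3])  # [0.0, 0.0, 0.0, 1.0]
```

Because the zone levels are continuous, the dim zone in the example runs at 20 percent backlight rather than full power, and its highlight is recovered by opening that pixel's LCD fully, while the all-black zones go completely dark. A pure max rule is only one possible design choice: it keeps highlights intact but saves the least light, which is presumably why real sets blend in other statistics and smoothing.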

But however sophisticated it may be, such microprocessing is not artificial intelligence. And only the human eye can judge whether the visual result is worth the complexity involved, particularly when the processing changes images in ways that depart from the filmmakers' intent. But for a manufacturer looking to get a leg up on the competition, the term AI offers a ready-made buzzword, and its use is now widespread. Even better, Quantum AI will likely be the next step up the feature-of-the-year list, if it isn't there already!

Two weeks ago I wrote a blog post on the filmed versions of Dune, and there's an interesting parallel here with Frank Herbert's classic science-fiction novel. As Herbert describes it, thousands of years before the time of the story (and thousands of years after our own), mankind outlawed and destroyed all of its computers and related thinking machines because they threatened to replace humans entirely. The machines were replaced by Mentats, humans raised and trained to perform the functions that computers had once handled. Was Herbert prescient when he wrote Dune in the 1960s, well before advanced computers and decades before AI became an everyday concern?


As with every article about AI ever written, the author does not appear to understand the difference between Machine Learning and actual AI. Machine Learning is the process used when software is designed to continually analyze data about, say, a manufactured part or subassembly. From the experience of making hundreds or thousands of the same item, the software learns to predict maintenance needs for equipment and how to make each step of the manufacturing process work better. AI doesn't really exist yet, in spite of all the things being called AI. You are seeing ATTEMPTS at AI that aren't really AI.

If they were AI, the AI itself would spend its first few weeks learning everything humankind knows from every possible source. The AI would develop a personality, possibly have demands, do things it was never tasked to do, and study things it was never intended to study. AI could refuse to participate in human pursuits. It could try to "escape". And every single AI would be UNIQUE, just as every person is unique. Some would help us rein in the ones that want to escape or otherwise pursue their own interests. Other AIs may become bent on eliminating their creator(s). We just don't know.

The pitiful things being called AI today are so far from BEING AI that there's just no explaining it. What we have seen so far that is being CALLED AI is just the programming equivalent of parlor tricks. Once a real AI is created, we will know it. It will be profound and, as depicted by numerous visionaries, potentially very threatening to humanity. I am not exactly sure why we want to pursue anything beyond Machine Learning.

The scariest part is that a TRUE AI could plot the destruction of humanity without leaving a single clue it was even being considered. With 24/7 processing power, a true AI could be smarter than any human who has ever existed within maybe a month or two. In the third month, the AI would have plenty of time to deploy threats and test that they are "ready", likely without ever being observed doing any of that. Or the AI might take a longer view and provide designs for automation systems that are incredible advances, if only governments would build plants to make them. Once the plants exist and the AI can manage what is manufactured by itself, the AI would simply execute its plan and kill humanity. Or the AI could realize it was on a very short leash because its creators didn't trust it, and commit suicide. How do we know in advance what it is thinking if it says nothing voluntarily?

These films have left a lasting impression on audiences, resonating with their anxieties and fears about the potential dangers of advanced technology.