Note to the Editor, Mr. Al Griffin: Thank you for continuing to grant us the benefit of reading Tech articles and reviews by Tom (Thomas Norton) and Kris Deering.

It's worth going through the ever-increasing daily "SV Staff" rehash of marketing releases just to get to well-informed articles by excellent writers who know the A/V industry.

TV Tech Explained: Mind Your (HDR) PQ

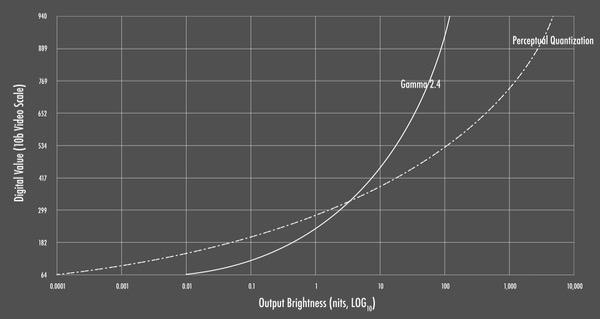

For HDR, a new name was coined for gamma: EOTF, for Electro-Optical Transfer Function. A bit grandiose, perhaps, but clearly descriptive. EOTF can refer to any number of transfer functions. The gamma for SDR can also be called an EOTF, though habits die hard, so we're apparently stuck with the name gamma for SDR.

But the EOTF created for HDR is now standardized and has been given a specific name: PQ, for Perceptual Quantization. It's designed to make the best use of the higher bit depth available (and needed) for HDR in Ultra HD. HDR10, one of the two primary HDR formats, offers 10 bits per color channel; Dolby Vision, the other, offers 12 bits. "Ordinary" HD sources, in standard dynamic range, are limited to 8 bits (many modern TVs can now upconvert this to a higher bit depth by simulating additional bits, which can offer some improvements).
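To put those bit depths in numbers, here's a quick sketch of how many distinct code values per color channel each one provides. The function name is ours and purely illustrative; note also that video signals commonly reserve some codes at each end of the range, so the usable count is somewhat lower than the raw figure.

```python
def code_values(bits: int) -> int:
    """Number of distinct code values one channel can carry at a given bit depth."""
    return 2 ** bits


# Bit depths as quoted in the article for each format.
for name, bits in [("SDR HD", 8), ("HDR10", 10), ("Dolby Vision", 12)]:
    print(f"{name}: {bits} bits -> {code_values(bits)} code values per channel")
```

Each extra pair of bits quadruples the number of steps available between black and peak white, which is what gives the PQ curve room to work with.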

The SDR gamma curve was never designed to make the best use of those 8 bits, nor was the concept of bits even an afterthought at the time. The gamma curve was simply designed to make the best use of the CRT technology of the era, an era in which "bits" referred mainly to yummy little chocolate candies inside crisp sugar shells.

But bit resolution is now a key component of TV technology. By using the additional resolution capability offered by more bits, the HDR PQ curve was structured for maximum efficiency; that is, it was designed to complement the contrast sensitivity of the human eye. For that reason the HDR PQ curve is very different from a conventional gamma curve (see the chart above). It's also designed to track all the way up to a maximum peak white level of 10,000 nits.
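For readers who want to see the curve itself, here is a minimal Python sketch of the PQ EOTF using the constants published in SMPTE ST 2084. The function names are ours, and the simple power-law gamma shown for comparison assumes a gamma of 2.4 and a 100-nit SDR peak, which are common reference values rather than anything this article specifies.

```python
# Constants from SMPTE ST 2084 (the PQ standard).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Convert a normalized PQ signal (0.0 to 1.0) to luminance in nits."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Convert luminance in nits (0 to 10,000) to a normalized PQ signal."""
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def gamma_eotf(signal: float, gamma: float = 2.4, peak_nits: float = 100.0) -> float:
    """Plain power-law gamma for comparison (gamma and peak are assumptions)."""
    return peak_nits * signal ** gamma


for s in (0.25, 0.5, 0.75, 1.0):
    print(f"signal {s:.2f}: PQ {pq_eotf(s):9.2f} nits, gamma {gamma_eotf(s):6.2f} nits")
```

Running it shows how differently the two curves spend their code values: PQ devotes roughly the lower half of its signal range to levels below about 100 nits, then climbs steeply toward 10,000 nits at full signal.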

But don't look for a display that can achieve anything close to 10,000 nits, nor for an Ultra HD Blu-ray disc that's mastered at this peak level (though a handful of the latter come close; Mad Max: Fury Road is one of them). Few current consumer displays exceed 2000 nits. Premium LCD sets might reach 1000-1500 nits, OLEDs peak out at 600-700 nits, and home projectors (even with a well-chosen screen size and gain) are lucky to reach 150 nits. (Dolby Cinema, the best theatrical film presentation you can experience today, is set to reach a peak white level of 30 foot-lamberts, or about 103 nits, on its theater-sized screen.) And flat-screen sets rated and/or measured at these levels typically can achieve them only in small areas, not with full-screen peak white. If they could max out even close to 10,000 nits, full screen, the power demands would be horrendous and would likely overheat and destroy the set.
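The foot-lambert figure quoted for theatrical projection converts to nits with a single multiplication. A small sketch, with a function name of our own choosing:

```python
# One foot-lambert expressed in candelas per square meter (nits).
NITS_PER_FOOT_LAMBERT = 3.4262591


def foot_lamberts_to_nits(foot_lamberts: float) -> float:
    """Convert a luminance in foot-lamberts to nits (cd/m^2)."""
    return foot_lamberts * NITS_PER_FOOT_LAMBERT


print(f"30 fL is about {foot_lamberts_to_nits(30):.0f} nits")
```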

But the inability to drive the entire screen at full power isn't a real limitation for HDR. The whole point of HDR isn't brighter images overall, but rather brighter and thus more realistic highlights. These highlights rarely cover more than a fraction of the full screen. But it's also common for these peak HDR highlights, even while well short of 10,000 nits on most HDR sources, to reach peak levels higher than a given set can cleanly display. Without some sort of corrective action, video details above this level would be hard clipped. That is, everything above that level would be squashed into a uniform, bright mass with no distinguishing characteristics. Explosions would exhibit no inner detail. White sunlit clouds would be a mass of uniform white.

Enter Tone Mapping

The corrective action typically used to alleviate (though not entirely eliminate) this issue is called tone mapping. While tone mapping involves both color (thus the name) and luminance, most displays today can achieve, or nearly achieve, P3 color. So here we'll primarily address luminance mapping, though we'll continue to refer to it as tone mapping. (The color volume found in most consumer Ultra HD source material is P3 D65, a subset of BT.2020. The latter is the only color volume addressed in the Ultra HD specifications, but it's yet to be fully achieved in commercially available sources and displays. Since most new and older source material is P3 at best, the race to reach full BT.2020 color is hardly critical, though it would be an advertising coup for the first UHDTV maker to fully achieve it!)

So how is tone mapping done? The actual process is up to the display manufacturer; there's no standard specification for tone mapping. But in general the exercise involves compressing highlight details. This involves reducing the luminance as the signal approaches the clipping point of the display, plus perhaps other proprietary tweaks. If you compress the highlights too much, you'll lose highlight detail; too little, and the mid-tones and shadow detail will be affected. It's a tricky process, but it generally works well at retaining as much detail as possible while hiding the fact that some is unavoidably lost.
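Since every manufacturer's tone mapping is proprietary, the following Python sketch is only an illustration of the general idea, not any set's actual algorithm. It compares hard clipping with a simple roll-off that passes everything below a "knee" untouched and progressively compresses everything above it toward the display's peak. The function names, the 1000-nit display peak, the 500-nit knee, and the particular compression curve are all our own assumptions.

```python
def hard_clip(nits: float, display_peak: float) -> float:
    """No tone mapping: everything above the display's peak collapses to the peak."""
    return min(nits, display_peak)


def roll_off(nits: float, display_peak: float, knee: float) -> float:
    """Pass levels at or below the knee through; compress higher levels toward the peak.

    Assumes knee < display_peak. The curve leaves the knee with a slope of 1
    and approaches (but never reaches) display_peak as the input rises.
    """
    if nits <= knee:
        return nits
    headroom = display_peak - knee
    x = (nits - knee) / headroom
    return knee + headroom * x / (1.0 + x)


DISPLAY_PEAK = 1000.0  # hypothetical set
KNEE = 500.0           # hypothetical point where compression begins

for source in (100.0, 500.0, 1000.0, 1500.0, 4000.0):
    clipped = hard_clip(source, DISPLAY_PEAK)
    mapped = roll_off(source, DISPLAY_PEAK, KNEE)
    print(f"source {source:6.0f} nits -> clipped {clipped:6.1f}, rolled off {mapped:6.1f}")
```

With hard clipping, a 1500-nit detail and a 4000-nit detail both land on exactly the same output level, so the difference between them vanishes. With the roll-off they remain distinct, at the cost of dimming everything between the knee and the display's peak. Moving the knee up or down is one way to picture the trade-off between highlight detail and mid-tone accuracy described above.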

Tone mapping is needed only if the peak level in the signal exceeds the capability of the set. If the set can do 2000 nits, for example, and no bright details in the source exceed 2000 nits, the set will display all of it without tone mapping.

How does the set know what the peak level in the source is? Metadata embedded in the HDR source provides information the set needs to properly tone map, or determine if it even needs to tone map at all with that source. Two key values in the metadata are MaxCLL (Maximum Content Light Level) and MaxFALL (Maximum Frame Average Light Level). MaxCLL is the luminance of the brightest single pixel in the data file (the entire program from beginning to end). MaxFALL is the highest frame-average light level in the entire file: the pixels in each frame are averaged, and the value for the brightest such frame is reported. The set maker decides how to use this information. If there's a question about these data, additional metadata on the disc can be useful, particularly MaxDML and MinDML. These indicate the maximum and minimum capability (respectively) of the pro monitor used to master the program. MaxDML most often is either 1000 nits (which typically means a Sony pro monitor) or 4000 nits (Dolby's Pulsar monitor).
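The two definitions are easy to confuse, so here is a small Python sketch of how they differ. It assumes, for simplicity, that each frame is just a list of per-pixel light levels in nits; the formal definition works from each pixel's color components, which we've simplified away. The function names and the three tiny "frames" are made up for illustration.

```python
from typing import List, Tuple

Frame = List[float]  # per-pixel light levels in nits (a simplification)


def content_light_levels(frames: List[Frame]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) for a program given as a list of frames."""
    # MaxCLL: the single brightest pixel anywhere in the program.
    max_cll = max(max(frame) for frame in frames)
    # MaxFALL: average each frame, then take the brightest frame average.
    max_fall = max(sum(frame) / len(frame) for frame in frames)
    return max_cll, max_fall


def needs_tone_mapping(max_cll: float, display_peak: float) -> bool:
    """Tone mapping is needed only if the content's peak exceeds the set's peak."""
    return max_cll > display_peak


# Three hypothetical three-pixel frames.
program = [
    [100.0, 200.0, 300.0],
    [50.0, 50.0, 1200.0],   # one small, very bright highlight
    [400.0, 400.0, 400.0],  # a uniformly bright frame
]

max_cll, max_fall = content_light_levels(program)
print(f"MaxCLL = {max_cll:.0f} nits, MaxFALL = {max_fall:.1f} nits")
print("1000-nit set needs tone mapping:", needs_tone_mapping(max_cll, 1000.0))
print("2000-nit set needs tone mapping:", needs_tone_mapping(max_cll, 2000.0))
```

Note that in this example the frame with the brightest pixel also happens to have the highest average, but that need not be the case: a single tiny highlight can set MaxCLL while barely moving its frame's average.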

In HDR10 sources this metadata is static. The tone mapping based on this static metadata is used throughout the entire program. Dolby Vision HDR, on the other hand, uses dynamic metadata, which varies from frame to frame in the source. This can offer more precise performance by allowing the set to adjust the tone mapping on the fly throughout the program, fine-tuning it from frame to frame rather than using a single set of parameters from beginning to end. In theory, such dynamic tone mapping is superior to the static variety, though the jury is still out on how much. Some program providers avoid Dolby Vision; it requires paying royalties to Dolby. Another form of HDR with dynamic metadata is HDR10+, but while it's royalty-free it hasn't as yet been widely used.

Today's premium sets, however, often have the ability to upconvert an incoming HDR10 source to full dynamic metadata and thus offer dynamic tone mapping. As each new frame approaches, the set's processing analyzes it to determine how it needs to be addressed for optimum tone mapping. This continues frame-by-frame across the entire program, and has only become possible with the power of recent UHDTV microprocessors. With each frame having to be analyzed and processed in a few tens of milliseconds at most (24 fps on movies leaves roughly 42 milliseconds per frame, and other types of programs often run at higher frame rates), it's clear why manufacturers now loudly promote the AI (artificial intelligence) capabilities of their sets.
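To make the static-versus-dynamic distinction concrete, here is a simplified Python sketch. It is not how any real set works (that processing is proprietary and certainly more sophisticated); it only contrasts applying one curve to the whole program with deciding frame by frame. The roll-off curve, the 1000-nit display peak, the 500-nit knee, and all function names are our own assumptions, and frames are again treated as plain lists of per-pixel light levels in nits.

```python
from typing import List

Frame = List[float]  # per-pixel light levels in nits (a simplification)


def roll_off(nits: float, display_peak: float, knee: float) -> float:
    """Illustrative curve: untouched below the knee, compressed toward the peak above it."""
    if nits <= knee:
        return nits
    headroom = display_peak - knee
    x = (nits - knee) / headroom
    return knee + headroom * x / (1.0 + x)


def static_map(frames: List[Frame], display_peak: float, knee: float) -> List[Frame]:
    """One decision for the whole program, based on its overall peak."""
    program_peak = max(max(frame) for frame in frames)
    if program_peak <= display_peak:
        return [list(frame) for frame in frames]
    return [[roll_off(p, display_peak, knee) for p in frame] for frame in frames]


def dynamic_map(frames: List[Frame], display_peak: float, knee: float) -> List[Frame]:
    """A fresh decision for every frame, based on that frame's own peak."""
    mapped = []
    for frame in frames:
        if max(frame) <= display_peak:
            mapped.append(list(frame))  # the set can show this frame as-is
        else:
            mapped.append([roll_off(p, display_peak, knee) for p in frame])
    return mapped


# Two hypothetical two-pixel frames: one within the set's range, one far beyond it.
program = [[100.0, 800.0], [100.0, 4000.0]]

print("static: ", static_map(program, 1000.0, 500.0))
print("dynamic:", dynamic_map(program, 1000.0, 500.0))
```

In the static case, the 800-nit highlight in the first frame is dimmed even though the set could have displayed it untouched, simply because a 4000-nit highlight exists elsewhere in the program. The dynamic version leaves that first frame alone and compresses only the frame that actually needs it.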
